Most wearables do a great job delivering friendly summaries:

“Your readiness is high today — go work out.”

“Your body needs rest — take it easy.”

They’re clean. Digestible. Reassuring.

But underneath those notifications sits an enormous amount of physiological telemetry — far more than any human could reasonably interpret day to day. And as someone who works with data for a living, I kept coming back to the same question:

Why am I collecting all this data if it’s only being translated into a score and a sentence?

That question turned into a small weekend experiment — one where I put an AI reasoning layer on top of my Oura data and asked it to behave like a real coach.

What I learned had very little to do with wearables — and everything to do with how organizations misunderstand data.

Oura Has the Data. Insight Gets Compressed Away.

I like my Oura Ring. It captures:

- HRV and resting heart rate

- Body temperature deviations

- Sleep stages, latency, efficiency

- Bedtime regularity

- Activity intensity and recovery index

- Daily and weekly patterns

But the app ultimately compresses all of that into:

- A readiness score

- A sleep score

- A push-or-rest suggestion

And I couldn’t shake the feeling that something important was getting lost.

If I’m generating thousands of data points per week, why am I only getting a handful of generic outputs?

That isn’t a data-collection problem.

It’s a translation problem.

The Experiment: Add a Reasoning Layer, Not Another Dashboard

I didn’t want better charts.

I wanted interpretation.

So I built a very small system:

1. Ingest raw Oura telemetry nightly

Using a FastAPI backend, I pull:

- Sleep architecture (REM, deep, latency, efficiency)

- Readiness contributors

- HRV and resting heart rate

- Activity intensity, MET minutes, steps, distance

- Temperature deviations

- Recovery index

- Timing regularity
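As a rough sketch of what this ingestion step could look like (not the exact code behind this project), the snippet below pulls daily summaries and groups them by day. It assumes the Oura API v2 daily collections (`daily_sleep`, `daily_readiness`, `daily_activity`) and a personal access token. The function names are hypothetical, and the FastAPI routing is left out so the example stays standard-library only.

```python
import json
import urllib.parse
import urllib.request

# Assumption: Oura API v2 daily-summary collections; swap in the endpoints you actually use.
OURA_BASE_URL = "https://api.ouraring.com/v2/usercollection"
COLLECTIONS = ("daily_sleep", "daily_readiness", "daily_activity")


def build_url(collection: str, start_date: str, end_date: str) -> str:
    """Build the request URL for one collection over a date range (YYYY-MM-DD)."""
    query = urllib.parse.urlencode({"start_date": start_date, "end_date": end_date})
    return f"{OURA_BASE_URL}/{collection}?{query}"


def fetch_collection(collection: str, start_date: str, end_date: str, token: str) -> list[dict]:
    """Fetch one collection. Assumes records arrive in a top-level "data" list.

    Pagination is ignored here, which is fine for a week of daily summaries.
    """
    request = urllib.request.Request(
        build_url(collection, start_date, end_date),
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response).get("data", [])


def merge_by_day(collections: dict[str, list[dict]]) -> dict[str, dict]:
    """Group records from several collections under their shared "day" key."""
    merged: dict[str, dict] = {}
    for name, records in collections.items():
        for record in records:
            merged.setdefault(record["day"], {"day": record["day"]})[name] = record
    return merged


def fetch_days(start_date: str, end_date: str, token: str) -> dict[str, dict]:
    """Pull every collection and return one merged record per day."""
    raw = {
        name.removeprefix("daily_"): fetch_collection(name, start_date, end_date, token)
        for name in COLLECTIONS
    }
    return merge_by_day(raw)
```

A nightly job could call `fetch_days` with the last seven dates and store the result as JSON for the later steps.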

2. Normalize it into context

Instead of isolated daily numbers, the system builds:

- A one-day snapshot

- A rolling seven-day view
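One way to build those two views, sketched under the assumption that each day is a merged record with a `day` field plus `sleep` and `readiness` sections that carry a `score`. The function name `build_context` and the output layout are illustrative choices, not a fixed format.

```python
from statistics import mean


def build_context(days: list[dict], window: int = 7) -> dict:
    """Return the latest day plus a trailing-window view with average scores."""
    if not days:
        raise ValueError("need at least one day of data")

    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    ordered = sorted(days, key=lambda d: d["day"])
    recent = ordered[-window:]
    latest = recent[-1]

    averages = {}
    for section in ("sleep", "readiness"):
        scores = [
            d[section]["score"]
            for d in recent
            if section in d and d[section].get("score") is not None
        ]
        if scores:
            averages[section] = round(mean(scores), 1)

    return {
        "snapshot": latest,
        "rolling": {
            "start": recent[0]["day"],
            "end": latest["day"],
            "days": len(recent),
            "average_scores": averages,
            "daily": recent,
        },
    }
```

Keeping the full daily records inside the rolling view, rather than only the averages, lets the model compare individual nights instead of reasoning from a single mean.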

3. Feed the full context into an AI model

Using Python and the ChatGPT API, I ask it to:

- Summarize the day and week

- Identify patterns and anomalies

- Connect activity to recovery and sleep

- Highlight which metrics actually mattered

- Flag risks and weak signals

- Generate a concrete coaching plan
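A hedged sketch of how that request might be assembled. It assumes the official `openai` Python package (version 1 or later) with an `OPENAI_API_KEY` in the environment. The prompt wording, the function names, and the choice of model are all illustrative, not the exact prompt used here.

```python
import json

# Illustrative prompt: the task list mirrors the steps above, the wording is an assumption.
COACH_INSTRUCTIONS = """You are a recovery and training coach.
Using the telemetry provided, write a markdown report that:
- summarizes the day and the week
- identifies patterns and anomalies
- connects activity to recovery and sleep
- highlights which metrics actually mattered
- flags risks and weak signals
- ends with a concrete coaching plan
Only make claims the data supports."""


def build_messages(context: dict) -> list[dict]:
    """Pair the coaching instructions with the full telemetry context."""
    payload = json.dumps(context, indent=2, sort_keys=True)
    return [
        {"role": "system", "content": COACH_INSTRUCTIONS},
        {"role": "user", "content": "Telemetry context (JSON):\n" + payload},
    ]


def generate_report(context: dict, model: str) -> str:
    """Send the context to a chat model and return its markdown report."""
    from openai import OpenAI  # third-party dependency: pip install openai

    client = OpenAI()  # picks up OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(context),
    )
    return response.choices[0].message.content
```

Separating `build_messages` from the network call makes the prompt easy to inspect and test without spending tokens.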

4. Return a single written report

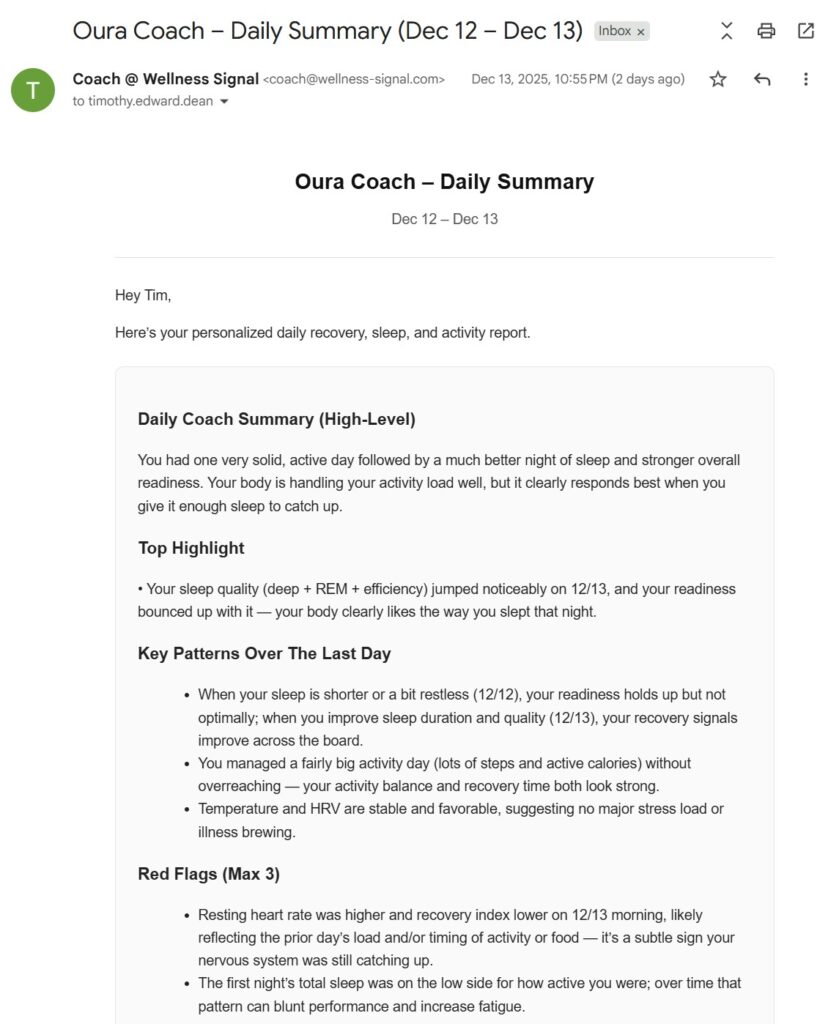

Every morning, I get a daily and weekly narrative (as pictured below).

No UI. No charts. Just markdown.

That’s it.

What the Raw Data Actually Looks Like

Here’s an abbreviated example of the raw JSON my API generates before any interpretation happens (the full payload runs to thousands of lines):

    {
      "day": "2025-12-10",
      "sleep": {
        "contributors": {
          "deep_sleep": 96,
          "efficiency": 83,
          "latency": 97,
          "rem_sleep": 99,
          "timing": 100
        },
        "score": 92
      },
      "readiness": {
        "contributors": {
          "hrv_balance": 79,
          "recovery_index": 100,
          "sleep_balance": 77
        },
        "score": 87
      },
      "activity": {
        "active_calories": 603,
        "training_volume": 96
      }
    }

On its own, this data tells me almost nothing I can act on.

No human wants to parse this, correlate it with behavior, and turn it into a plan — every week, forever.

That’s exactly where reasoning matters.
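To make the gap concrete, here is about as far as simple code gets with a payload like the one above: it can rank the weakest contributors for a day, but it cannot say why they dipped or what to do about it. This is a small illustration using the sample values from the JSON, and `weakest_contributors` is a hypothetical helper name.

```python
def weakest_contributors(day: dict, limit: int = 3) -> list[tuple[str, int]]:
    """Return the lowest-scoring sleep and readiness contributors for one day."""
    scored = []
    for section in ("sleep", "readiness"):
        contributors = day.get(section, {}).get("contributors", {})
        for name, value in contributors.items():
            if value is not None:
                scored.append((f"{section}.{name}", value))
    return sorted(scored, key=lambda item: item[1])[:limit]


# Values copied from the abbreviated payload above.
sample = {
    "day": "2025-12-10",
    "sleep": {
        "contributors": {
            "deep_sleep": 96, "efficiency": 83, "latency": 97,
            "rem_sleep": 99, "timing": 100,
        },
        "score": 92,
    },
    "readiness": {
        "contributors": {"hrv_balance": 79, "recovery_index": 100, "sleep_balance": 77},
        "score": 87,
    },
}

print(weakest_contributors(sample))
# [('readiness.sleep_balance', 77), ('readiness.hrv_balance', 79), ('sleep.efficiency', 83)]
```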

What the AI Did Differently

What surprised me wasn’t the summary — it was the connections.

Sleep

It noticed that:

- My strongest nights shared nearly identical timing

- My weakest nights followed irregular pre-sleep routines

- Deep and REM sleep stayed strong even when efficiency dipped

- Recovery-index drops aligned with prior-day workload
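A claim like the last one is worth spot-checking against the numbers rather than taking on faith. The sketch below is one possible check, not something the original system necessarily did. It treats `training_volume` from the payload as a stand-in for workload, which is an assumption, and the function name and threshold are hypothetical.

```python
from datetime import date, timedelta
from statistics import mean


def recovery_after_load(days: list[dict], load_threshold: int) -> dict:
    """Compare next-day recovery index after high-load days versus other days."""
    ordered = sorted(days, key=lambda d: d["day"])
    after_high: list[int] = []
    after_other: list[int] = []

    for previous, current in zip(ordered, ordered[1:]):
        # Only pair consecutive calendar days; skip over gaps in the data.
        gap = date.fromisoformat(current["day"]) - date.fromisoformat(previous["day"])
        if gap != timedelta(days=1):
            continue
        load = previous["activity"]["training_volume"]
        recovery = current["readiness"]["contributors"]["recovery_index"]
        (after_high if load >= load_threshold else after_other).append(recovery)

    return {
        "after_high_load": mean(after_high) if after_high else None,
        "after_other_days": mean(after_other) if after_other else None,
        "pairs": len(after_high) + len(after_other),
    }
```

With only a week of data this is a sanity check, not statistics: a handful of pairs can agree with the model's story without proving it.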

Activity

It flagged:

- Inconsistent training volume despite high capacity

- Only two days meeting distance targets

- A single intensity spike creating downstream recovery strain

Red Flags

Instead of dozens of metrics, it surfaced:

- Two recovery dips worth paying attention to

- A short run of weak sleep

- Undershooting activity targets across the week

No scrolling. No interpretation fatigue.

Just signal.

The Output That Actually Changed My Behavior

The AI didn’t give me vague advice. It gave me a plan:

- Standardize bedtime within ±30 minutes

- Add two structured interval sessions mid-week

- Raise baseline daily steps (it even factored in that I work a desk job)

- Protect sleep on high-load training days

- Insert deliberate low-load recovery days

That’s coaching — not scoring.

And it was immediately more useful than anything I’d gotten from the app.

Why This Has Nothing to Do With Wearables

This experiment reinforced something I see constantly in organizations:

We already have the data.

We just don’t know how to use it.

Every environment I’ve worked in is drowning in telemetry:

- Security logs

- Network performance data

- Clinical throughput metrics

- Financial indicators

- Operational dashboards

Most of it ends up summarized into charts.

Very little of it becomes clear, actionable guidance.

Dashboards don’t create insight.

Interpretation does.

Where AI Actually Fits

AI isn’t valuable because it’s “smart.”

It’s valuable because it can sit on top of telemetry and:

- Reason across time

- Identify what changed

- Explain why it matters

- Suggest what to do next

Not replacing people —

but giving them the context they never have time to assemble.

The pattern is simple:

Telemetry → AI reasoning → action

Once you see it work — even in a small, personal system — it becomes obvious how much value we’re leaving untapped elsewhere.

Final Thought

This started as a personal experiment to get better sleep and recovery insights.

What it really showed me is that insight isn’t about collecting more data — it’s about translating what we already have into decisions we can actually act on.

That’s the layer most systems are missing.

And it’s the layer that’s about to matter a lot.
